Problems with "bursty" traffic over long distances

A well-known problem that slows long-distance TCP traffic down far more than it should is packet loss. One reason for packet loss is that packets are sent in bursts during the startup (slow-start) phase of a TCP connection. As a result, packets are lost at the peaks of the bursts, and because each burst lasts only a short time, the average bandwidth at which losses begin is much lower than the link could otherwise carry. This matters most when the connection has a long round-trip time (RTT).
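A toy model makes the burst growth concrete. This is a minimal Python sketch, assuming an idealized slow start in which the whole congestion window is sent back-to-back once per RTT and the window doubles every RTT. The function name `slow_start_bursts`, the initial window of 2 packets and the 1000-packet limit are illustrative assumptions, not measurements from a real TCP stack.

```python
def slow_start_bursts(initial_window: int, limit: int, max_rtts: int = 64) -> list[int]:
    """Burst sizes (in packets) sent in successive RTTs under an idealized slow start.

    Assumptions (hypothetical model, not a real TCP stack): the whole congestion
    window is sent back-to-back once per RTT, and the window doubles every RTT.
    Stops at the first burst larger than `limit`, the assumed number of packets
    the outgoing link can absorb in one burst.
    """
    bursts: list[int] = []
    window = initial_window
    for _ in range(max_rtts):
        bursts.append(window)
        if window > limit:  # this burst overflows the outgoing link: first losses
            break
        window *= 2  # slow start: window doubles each round trip
    return bursts


print(slow_start_bursts(initial_window=2, limit=1000))
# [2, 4, 8, 16, 32, 64, 128, 256, 512, 1024]
```

In this model the 1024-packet burst is the first to exceed the limit. Although it leaves the sender at line rate, averaged over a 175 ms RTT it corresponds to only about 70 Mbit/s (1024 × 1500 × 8 / 0.175).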

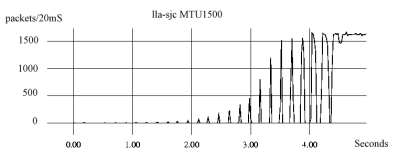

The graph above shows the burstiness when a TCP connection is established between machines in Luleå, Sweden, and San Jose, CA, with an RTT of about 175 milliseconds. In this case there are no packet losses, so the connection reaches its full speed (1 Gbit/s) after about 5 seconds.

Here we can clearly see the peaks in the sent traffic. A simple calculation shows the problem: if we assume that the outgoing link is limited to 1000 packets per 20 milliseconds, the first packets will be dropped when a peak exceeds 1000 packets.

If this packet rate could be sustained continuously (1000 packets of 1500 bytes every 20 ms, i.e. 50 times per second), the bandwidth would be 1500 × 1000 × 8 × 50 = 600 Mbit/s. During startup, however, the window is sent as one burst per RTT, so the drops already occur when the average rate is 1500 × 1000 × 8 × (1/0.175) ≈ 68.6 Mbit/s. This is only part of the truth: once packets are dropped, the sending rate is also reduced significantly.
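The two rates can be reproduced with a few lines of Python. This sketch assumes 1500-byte packets and the hypothetical 1000-packets-per-20-ms link limit from the example; the helper name `mbit_per_s` is illustrative only.

```python
PACKET_BYTES = 1500    # assumed full-size 1500-byte packets
LIMIT_PACKETS = 1000   # assumed outgoing limit: 1000 packets ...
INTERVAL_S = 0.020     # ... per 20 ms
RTT_S = 0.175          # Luleå - San Jose round-trip time


def mbit_per_s(packets: int, seconds: float, packet_bytes: int = PACKET_BYTES) -> float:
    """Average rate in Mbit/s when `packets` packets are sent every `seconds`."""
    return packets * packet_bytes * 8 / seconds / 1e6


# Smooth traffic: the link limit is reached at 1000 packets every 20 ms.
sustained = mbit_per_s(LIMIT_PACKETS, INTERVAL_S)
# Bursty traffic: one 1000-packet burst per RTT already hits the limit.
bursty = mbit_per_s(LIMIT_PACKETS, RTT_S)

print(f"smooth: {sustained:.1f} Mbit/s, bursty: {bursty:.1f} Mbit/s")
# smooth: 600.0 Mbit/s, bursty: 68.6 Mbit/s
```

The only difference between the two cases is the period over which the same 1000 packets are averaged: 20 ms for smooth traffic versus a full 175 ms RTT for one burst per round trip.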


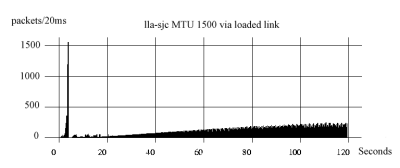

This graph shows what actually happens on a Gigabit link with around 100 Mbit/s of background traffic.

As we can see here, the transmission stops when the outgoing link overflows.

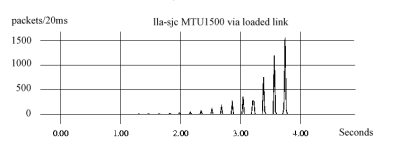

In reality, the transmission does not stop completely, but the number of packets sent is so small that the graph cannot show the traffic. What happens is that the sender falls back to slow start, and the outgoing traffic rate increases very slowly. Slow start after such a large packet loss is very slow, as shown in the figure above.
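Why recovery takes so long on a long-RTT path can be illustrated with a simplified, Reno-style model. This is an assumption: the text does not say which congestion-control algorithm the sender used, and modern stacks such as CUBIC grow faster. In the model, after a timeout the window restarts at one packet, doubles every RTT up to a threshold of half the window at the time of loss, and then grows by only one packet per RTT. The function `rtts_to_recover`, the 1000-packet window at loss and the reuse of the 175 ms Luleå - San Jose RTT are hypothetical choices for illustration.

```python
import math

PACKET_BITS = 1500 * 8  # assumed 1500-byte packets
RTT_S = 0.175           # assumed: RTT from the Luleå - San Jose example
LINK_BPS = 1e9          # 1 Gbit/s link


def rtts_to_recover(window_at_loss: int, target_window: int) -> int:
    """RTTs needed to grow the window back to `target_window` after a timeout.

    Simplified Reno-style model (an assumption, not any specific stack):
    the window restarts at 1 packet, doubles per RTT up to
    ssthresh = window_at_loss // 2, then grows by 1 packet per RTT.
    """
    ssthresh = max(window_at_loss // 2, 2)
    cwnd, rtts = 1, 0
    while cwnd < target_window:
        if cwnd < ssthresh:
            cwnd = min(cwnd * 2, ssthresh)  # slow start: exponential growth
        else:
            cwnd += 1  # congestion avoidance: linear growth
        rtts += 1
    return rtts


# Window needed to keep a 1 Gbit/s, 175 ms path full (bandwidth-delay product).
full_window = math.floor(LINK_BPS * RTT_S / PACKET_BITS)  # 14583 packets
rtts = rtts_to_recover(window_at_loss=1000, target_window=full_window)
print(f"{rtts} RTTs ≈ {rtts * RTT_S / 60:.0f} minutes to refill a {full_window}-packet window")
# 14092 RTTs ≈ 41 minutes to refill a 14583-packet window
```

Under these assumptions the exponential phase takes only 9 RTTs; almost all of the time is spent in the linear phase, which is why a single large loss can keep a long-distance connection far below link speed for a long time.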
